Methods

The findings in this report are based on quantitative data collected from nearly 6,100 responses to an online survey about the information worlds of adults in the U.S., how their understanding, beliefs, and attitudes about climate change are shaped, and how willing they are to take action as a result.

Data were collected between October 2023 and March 2024 from (1) an online survey deployed by Qualtrics to a sample of the general population in the U.S. between the ages of 16 and 85 (N = 4,503), and (2) a slightly modified version of the same online survey administered to a sample of college students, ages 18 to 35 (N = 1,593), enrolled at nine U.S. higher education institutions.

Three sets of questions frame our inquiry:

- What do individuals in the U.S. understand, believe, and feel about climate change? How are their attitudes shaped by the information practices and technologies that mediate their encounters with climate change news and information?

- How do individuals engage with others in their own personal orbit on the subject of climate change? How willing are people to discuss climate change and listen to those who may hold different views? How much do such interpersonal interactions influence what they know and think about climate change?

- What information practices contribute to being informed about and engaged with the climate crisis and motivated to take action? Which practices contribute to inaction, distrust, ambivalence, hopelessness, and indifference?

Prior to any data collection for this study, a research protocol was submitted to and approved by the Institutional Review Board (IRB) at the University of Washington Tacoma, where the study was based, and at several schools requiring their own IRBs.

Survey Development

PIL team members began the development of our survey instrument with a benchmark analysis of questions previously asked by the Yale Climate Change Opinion Survey,1 the Adult Hope Scale,2 Gallup’s annual climate change polls,3 Pew’s ongoing climate change polls,4 and the Washington Post-Kaiser Family Foundation Climate Change Survey.5

While previous polls have measured respondents’ cultural, generational, and political differences, the purpose of our research was unique: We collected data from a sample of survey respondents to study how they encounter, engage with, and respond to climate change news and information; how these interactions shape their perceptions of the worldwide climate emergency; and how these attitudes impact their willingness to take action. As a follow-up analysis, we explored how these questions pertain to college students for whom climate change has always colored the news and information they’ve encountered throughout their lives.

As the team finalized a draft of the survey instrument, it was reviewed by a survey consultant6 and modifications were made before testing with 63 respondents for internal validity and reliability. Minor changes to the demographics questions and sequencing were made. Both versions of the final survey instrument, one for the general population and the other for college students, had 19 questions.

These questions asked about demographics, climate change beliefs and attitudes, information-seeking behaviors, climate change action, and self-assessments of anxiety and hope about the future of the planet. There were 35 individual statements measured along a Likert opinion scale that we used for mapping climate change understanding in our data analysis phase.

Data Collection

General Population

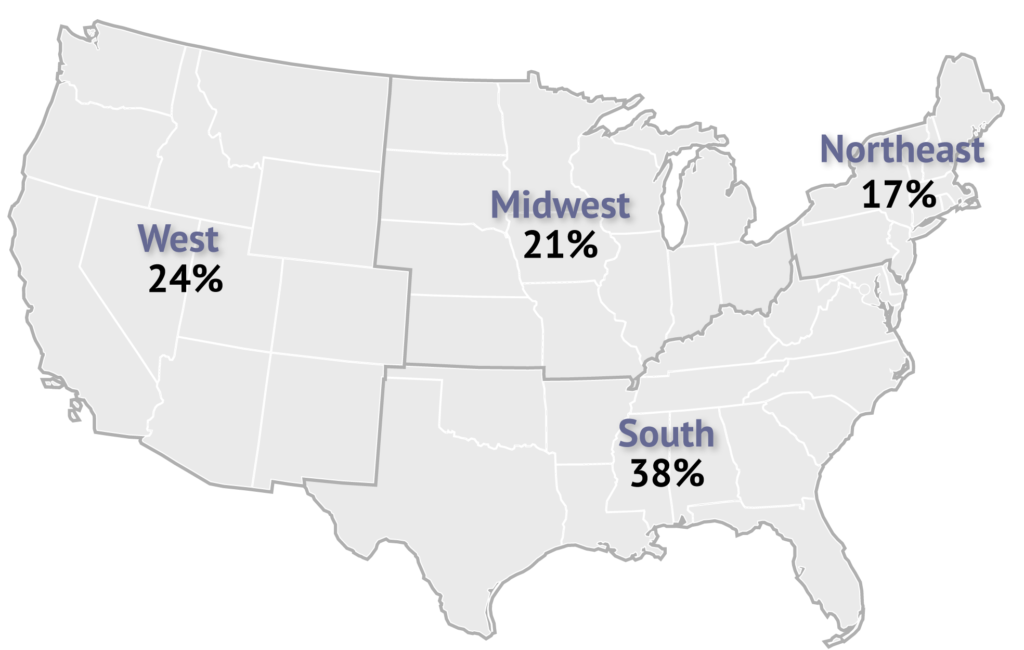

Between October 25, 2023, and November 25, 2023, Qualtrics, the survey software company, was hired to deploy the survey to a panel sample of general public respondents in the U.S. Qualtrics used their existing market research panels for administering our survey, while ensuring a diverse sample based on age, geographic location, educational attainment level, and political affiliation. The survey was pilot tested by Qualtrics before deployment. Qualtrics paid participants a nominal fee for being a part of the survey’s distribution panel. Figure 1 shows the regional breakdown for the general public sample.

The survey was active until a sample of 4,500 high-quality responses was collected. As a step in obtaining this sample size, Qualtrics scrubbed responses by removing submissions with response bias, such as “speeders” who moved too quickly through a survey to be thoughtful in considering their responses. Following this process, the total sample size delivered from Qualtrics was 4,503 respondents.

College Population

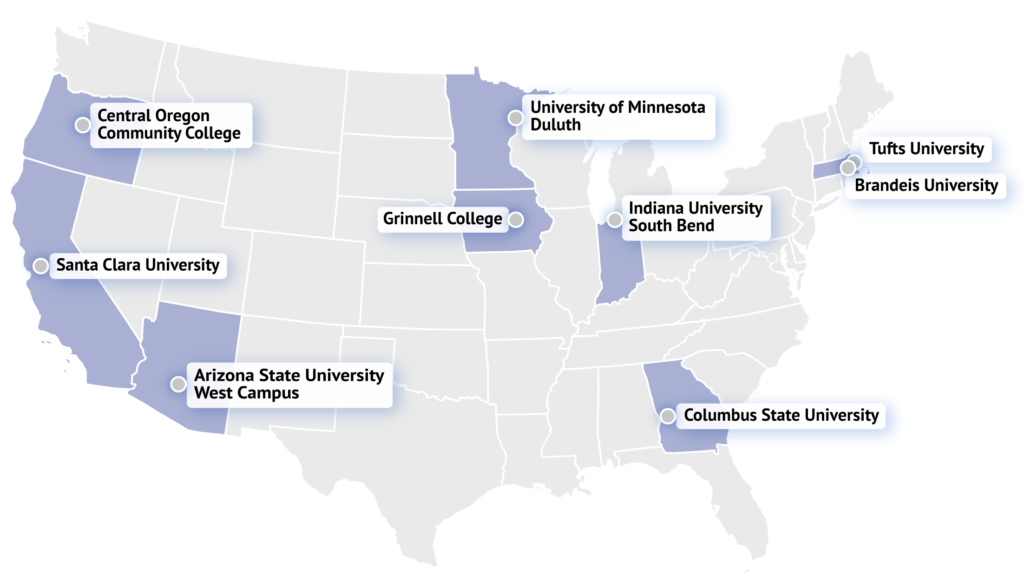

Between January 16, 2024, and March 5, 2024, the PIL team deployed the college survey to full-time credit students between the ages of 18 and 35 enrolled in the current term at nine U.S. two- and four-year colleges and universities. The institutional sample of undergraduates was selected for its institutional type (public and private four-year institutions and a two-year community college) and its diversity of geographic location (see Figure 2).

Email addresses from the Registrar at each institution were used to send students an invitation to take the voluntary survey. Students were sent one reminder seven days later, if they had not yet responded to the first invitation. Each student had an opportunity to voluntarily enter a contest for a $200 Amazon gift card, whether they completed the survey or not. One winner from each institution was randomly selected after the survey closed on their campus. The survey was open for 11 to 13 days on campuses in the sample, depending on whether the deployment date fell on a holiday or weekend. The final count of college students in the sample was 1,593.

Sample Description

General Population Sample

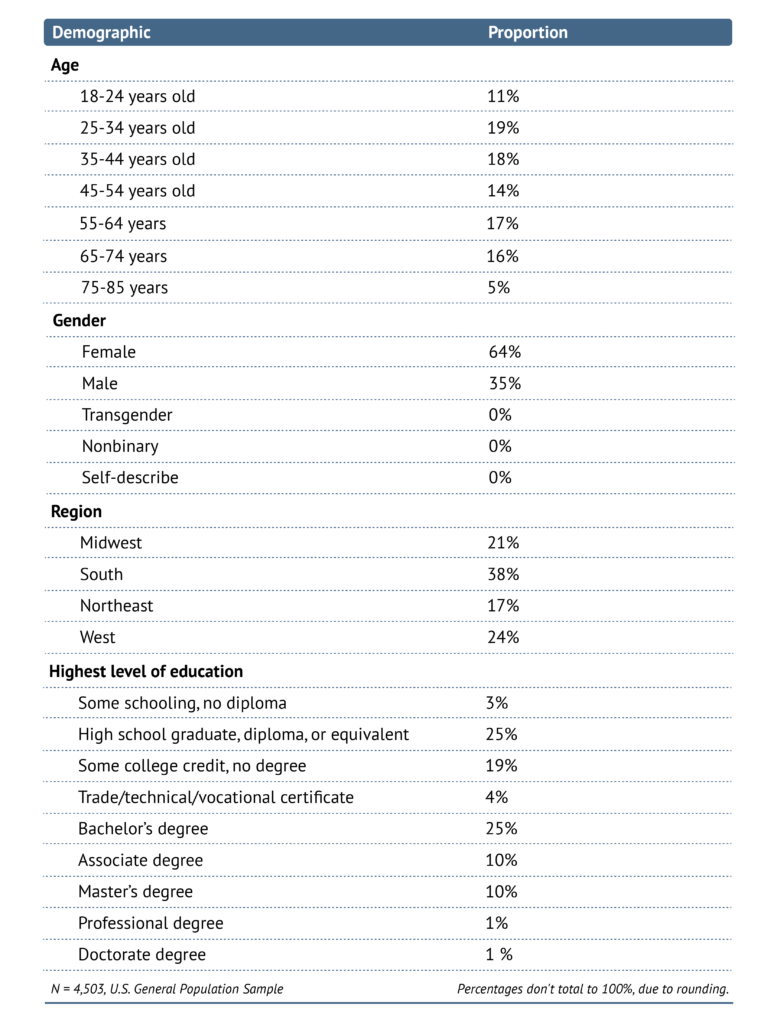

Survey respondents from the general population (N = 4,503) were bracketed into age ranges and geographical regions to ensure varied representation in responses. As is typical of online surveys, more women (64%) responded than men (35%)7. Most of the sample had received at least a high school education or equivalent certification, if not additional credits or degrees. See Table 1 for additional demographic information asked of the general population sample.

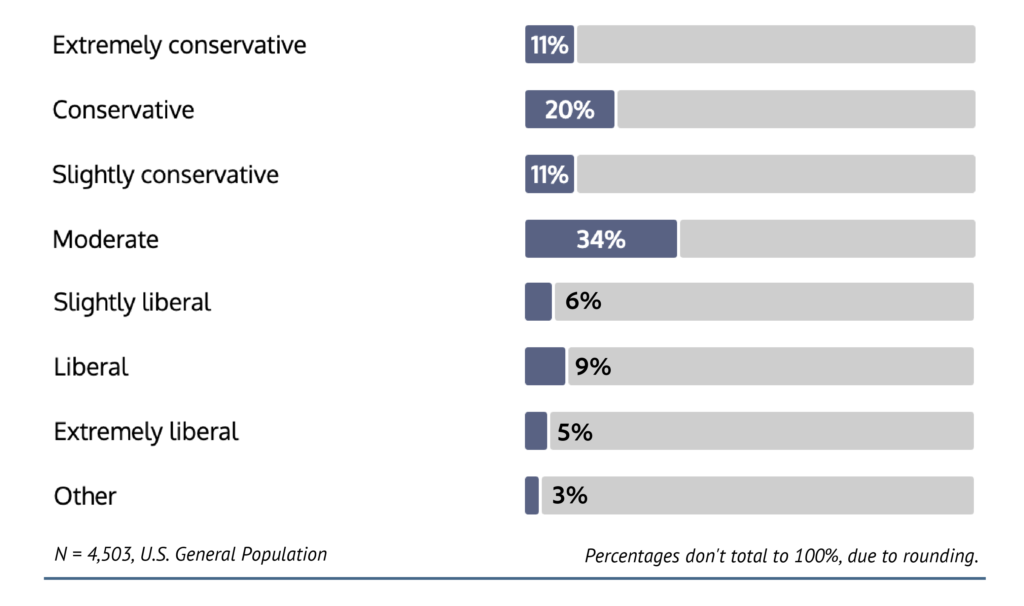

Based on our review of existing climate change polls, the PIL team was aware of the political polarization that can occur around the topic of climate change. Qualtrics’ panels were segmented and monitored to ensure that a varied distribution of political identities was represented in the results. See Figure 3 for a breakdown of participants’ self-identified political affiliations.8

College Sample

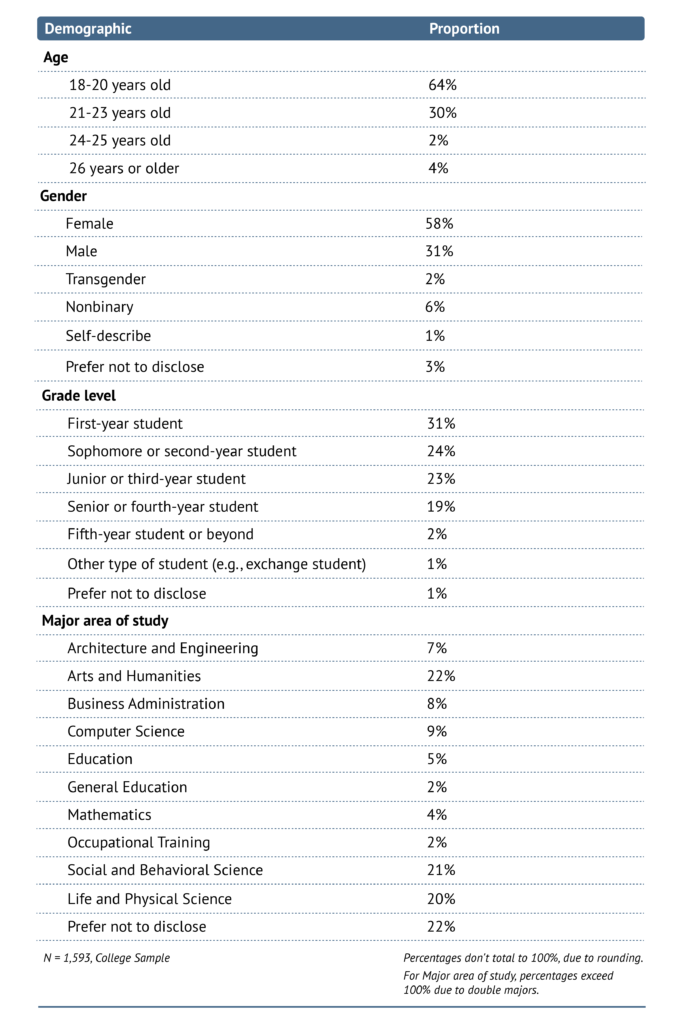

Survey respondents from the college population (N = 1,593) skewed younger than the general population sample but, similarly, a majority of the college sample identified as female (58%). Students were enrolled full-time during the winter 2024 term, and roughly equal distributions were found across popular majors. See Table 2 for additional demographic information asked of the college sample.

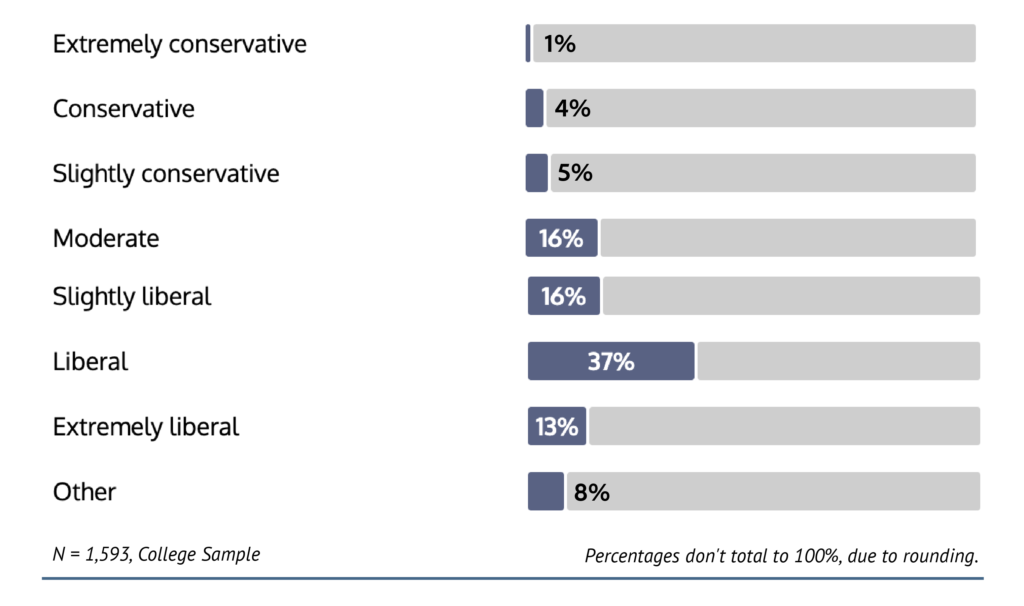

Consistent with responses to prior PIL surveys and representative of the college student population in general, a larger percentage of the college sample (66%) than of the general population identified as liberal (see Figure 4). This trend is consistent with U.S. college students being more liberal, and more likely to be registered as Democrats, than their counterparts not enrolled in college.9

Data Analysis: Mapping of Climate Understanding

Portions of our survey instrument consisted of a series of opinion questions measured along a Likert scale ranging from “strongly disagree” to “strongly agree.” These questions totaled 35 statements of opinion asked of each respondent, and the responses to these statements were used to cluster respondents based on their affinity. Only data from respondents in the U.S. general population sample were used for the clustering portion of our analysis, since the size and homogeneity of the college sample would have skewed results with an overrepresentation of the Engaged cluster at the expense of the other groupings in the analysis.

To conduct the clustering analysis, each respondent’s sequence of responses to the Likert scale questions was transformed into a character-based representation. This representation encoded, for each Likert statement in the order presented in the survey, whether the respondent agreed (“A”), disagreed (“D”), was neutral (“N”), or did not know their opinion (“Z”) with respect to the statement. Such sequences were computed for each respondent, after which all pairs of respondents were compared to one another to evaluate the similarity between their sequences of Likert responses.
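The encoding step described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual code: the answer labels, the collapsing of "strongly agree" and "agree" into a single "A" (and likewise for "D"), and the function name `encode_responses` are all assumptions, since the report does not publish its exact recoding rules.

```python
# Hypothetical label-to-code mapping; the report does not list its exact
# answer labels or say how the "strongly" levels were recoded.
LIKERT_TO_CODE = {
    "strongly agree": "A",
    "agree": "A",
    "neutral": "N",
    "disagree": "D",
    "strongly disagree": "D",
    "don't know": "Z",
}


def encode_responses(answers):
    """Turn an ordered list of Likert answers into a string such as 'ADNZ'."""
    return "".join(LIKERT_TO_CODE[answer.strip().lower()] for answer in answers)


# One character per statement, in survey order.
example = encode_responses(["Strongly agree", "Disagree", "Neutral", "Don't know"])
print(example)
```

In the study itself each respondent's sequence would hold 35 characters, one per Likert statement.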

A simple Hamming distance was used to compute the similarity between any two respondents as a count of the number of differences in their responses to each of the Likert statements. For example, if one respondent in the pair “agreed” with a specific Likert statement and the other respondent “disagreed” with that same statement, this difference in response contributed 1 point of overall difference between the respondents. If both respondents responded the same way to a given statement, this contributed 0 points of overall difference.
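As a minimal sketch of this comparison, the function below counts position-by-position mismatches between two encoded sequences. The function name and the toy five-character sequences are illustrative assumptions; the study's sequences have 35 characters.

```python
def hamming_distance(seq_a, seq_b):
    """Count the statements on which two respondents answered differently."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must cover the same statements")
    # Each mismatched position contributes 1 point; each match contributes 0.
    return sum(a != b for a, b in zip(seq_a, seq_b))


# The two toy respondents differ on the second and fifth statements.
print(hamming_distance("AADNZ", "ADDNA"))
```

Under this measure any mismatch counts as one point, so "agree" versus "neutral" weighs the same as "agree" versus "disagree".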

Counts of differences between respondents were added together to produce a total value of difference between them, and this value was inversely related to the similarity between them. Pairs of respondents with few differences between their sequences were considered high similarity, while pairs of respondents with many differences were considered low similarity.

These computed pairwise similarities between respondents were used to construct a network showing connections between respondents based on the similarity between them. A maximum difference count of seven was used as the threshold for determining if two respondents were connected in the network; this threshold was selected to ensure that if a pair of respondents shared consensus in at least 80% (28 statements) of the Likert scale statements in the survey, they were connected by a link in the network.
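The thresholding rule can be illustrated with a short sketch that links two respondents whenever their sequences differ on seven or fewer statements. The adjacency-dictionary representation, the function name `build_network`, and the toy respondents are assumptions made for illustration; the report does not describe the data structures or software it used.

```python
from itertools import combinations

N_STATEMENTS = 35      # Likert statements per respondent (from the text)
MAX_DIFFERENCES = 7    # link threshold stated in the text


def build_network(sequences, max_differences=MAX_DIFFERENCES):
    """Map each respondent id to the set of ids it is linked to."""
    network = {respondent: set() for respondent in sequences}
    for (id_a, seq_a), (id_b, seq_b) in combinations(sequences.items(), 2):
        differences = sum(a != b for a, b in zip(seq_a, seq_b))
        if differences <= max_differences:
            network[id_a].add(id_b)
            network[id_b].add(id_a)
    return network


# Toy respondents (hypothetical): r2 differs from r1 on 7 statements, r3 on 8.
sequences = {
    "r1": "A" * 35,
    "r2": "D" * 7 + "A" * 28,
    "r3": "D" * 8 + "A" * 27,
}
network = build_network(sequences)
print(network)

# Seven allowed differences leave 28 of 35 statements in agreement.
print((N_STATEMENTS - MAX_DIFFERENCES) / N_STATEMENTS)
```

Here r1 and r2 are linked, r1 and r3 are not, and r2 and r3 are linked because they differ on a single statement. Comparing every pair this way means roughly 10 million comparisons for 4,503 respondents.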

Using this resulting network, modularity was computed to identify major communities of clustering across the network. Many iterations of parameterization were conducted for this portion of the analysis to identify a threshold for connections in the network and level of resolution in the modularity analysis that yielded a balance of robust clusters without obscuring important within-cluster communities that bore meaning in our analysis.
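The report does not name the community-detection algorithm or software used. As a hedged sketch, the function below computes the standard modularity score for a candidate partition of a network, which is the quantity that modularity-based methods try to maximize. The `resolution` parameter follows the common generalized form of modularity and is assumed here to correspond to the "level of resolution" mentioned above; the toy network is invented for illustration.

```python
def modularity(network, communities, resolution=1.0):
    """Modularity of a partition of an undirected network.

    network: dict mapping each node to the set of its neighbours.
    communities: list of disjoint sets of nodes covering the network.
    """
    total_links = sum(len(neighbours) for neighbours in network.values()) / 2
    if total_links == 0:
        raise ValueError("network has no links")
    score = 0.0
    for community in communities:
        # Links with both ends inside the community (each is seen twice).
        internal = sum(
            1 for node in community for nb in network[node] if nb in community
        ) / 2
        degree_sum = sum(len(network[node]) for node in community)
        score += internal / total_links
        score -= resolution * (degree_sum / (2 * total_links)) ** 2
    return score


# Toy network (hypothetical): two triangles joined by one link.
toy = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"},
    "d": {"c", "e", "f"}, "e": {"d", "f"}, "f": {"d", "e"},
}
split = modularity(toy, [{"a", "b", "c"}, {"d", "e", "f"}])
merged = modularity(toy, [set(toy)])
print(split, merged)
```

Splitting the toy network into its two triangles scores higher than treating it as a single group, which is the kind of signal used to identify communities. Raising `resolution` penalizes large groups more heavily and so tends to favor smaller communities.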

This analysis yielded the definitions of our three primary groups discussed in the report: The Engaged, The Detached, and The Resistant. Additional small, localized communities identified from this clustering were removed from discussion of the clusters due to within-group abnormalities in response patterns that did not contribute meaningfully to the overall analysis. The colorized terrain graphic presented in the report (see Full Report, Figure 2 “How divided is America over climate change”) is a stylized representation of this underlying network and clustering analysis. It uses density contour estimation to transform the density of the underlying network into a thresholded visual representation that demonstrates the gradient of similarity and affinity across the network.

The heatmaps presented throughout the report use the terrain as a basis on top of which cells of equal area partition the underlying network into subsets of respondents. The extent of agreement or disagreement among these respondents is used to color each cell, resulting in a spatial distribution of opinion across the terrain.
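A rough sketch of the cell-based aggregation follows. It assumes each respondent already has a two-dimensional position in the terrain layout and uses square cells; the coordinates, cell shape, cell size, and the choice of "share who agreed" as the coloring value are all illustrative assumptions, since the report does not specify them.

```python
from collections import defaultdict


def cell_agreement(points, cell_size=1.0):
    """Share of respondents in each grid cell who agreed with one statement.

    points: iterable of (x, y, code), where code is 'A', 'D', 'N', or 'Z'.
    Returns a dict mapping (column, row) to the share of 'A' responses.
    """
    counts = defaultdict(lambda: [0, 0])  # cell -> [agree count, total count]
    for x, y, code in points:
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell][0] += code == "A"
        counts[cell][1] += 1
    return {cell: agree / total for cell, (agree, total) in counts.items()}


# Hypothetical layout positions and responses to a single statement.
points = [(0.2, 0.3, "A"), (0.8, 0.1, "D"), (1.5, 0.4, "A"), (1.1, 0.9, "A")]
shares = cell_agreement(points)
print(shares)
```

Each cell's share could then be mapped to a color scale to produce a heatmap over the terrain.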

Cluster Analysis of the College Sample

Additional clustering analysis was conducted separately for respondents in the college sample, using the same procedure described above. This analysis yielded one primary cluster in the college sample, with few meaningful differences within that cluster. This result was consistent with the observation that responses across all survey questions in the college sample demonstrated high consensus, with large proportions of sample participants responding to survey questions in similar ways.

Further investigation showed that the primary pattern of responses reflected in this overall college sample cluster was highly aligned with the primary pattern of responses in the Engaged group of the terrain. In acknowledgment of this, clustering results for the college sample were not included in the initial procedure for generating the definitions of the Engaged, Detached, and Resistant groups, nor were they reflected in the terrain graphic. This decision was made to avoid skewing the distribution of cluster groups in a combined general population plus college sample superset. This would have resulted in an overrepresentation of the Engaged group at the expense of other groups, potentially erasing important within- and across-group differences that were meaningful to our analysis.

Instead, college sample respondents were labeled within the terrain based on their similarity to the major groups in the terrain. To determine the similarity between college sample respondents and the U.S. general population definitions of The Engaged, The Detached, and The Resistant, a two-step procedure was utilized in which the responses of each college sample respondent were first mapped to the closest U.S. general population respondents based on similarity in Likert responses. Then the assignments of those most-similar respondents to the major cluster groups were used as a proxy to assign the college sample respondents to the same groups.
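The two-step assignment can be sketched as a nearest-neighbor lookup. The report does not state how many "closest" respondents were used or how ties were resolved, so this illustration assumes that every general population respondent at the minimum Hamming distance casts one vote and that the most common group wins. The function name and toy data are hypothetical.

```python
from collections import Counter


def assign_group(college_seq, population):
    """Label a college respondent using the closest general population respondents.

    population: list of (encoded_sequence, group_label) pairs.
    """
    # Step 1: distance from the college respondent to every population member.
    distances = [
        (sum(a != b for a, b in zip(college_seq, seq)), label)
        for seq, label in population
    ]
    closest = min(distance for distance, _ in distances)
    # Step 2: the groups of the closest respondents act as a proxy label.
    # Ties between groups are broken arbitrarily (first encountered wins).
    votes = Counter(label for distance, label in distances if distance == closest)
    return votes.most_common(1)[0][0]


# Toy general population sample with five statements per respondent.
population = [
    ("AAAAA", "Engaged"),
    ("AAAAN", "Engaged"),
    ("NNNZN", "Detached"),
    ("DDDDD", "Resistant"),
]
print(assign_group("AAANA", population))
```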

Methodological Limitations

There are challenges associated with any research method, and we took steps to avoid or minimize them. To enhance the reliability of this research project’s survey results, the PIL Team pilot-tested the survey instrument with 63 respondents between the ages of 16 and 80 who matched our selection criteria for the general population but were not eligible for the study sample. The Qualtrics Team also pilot-tested the survey instrument for the general population sample before deploying it. Based on comments from the pilot testers, we revised the wording and layout of questions before administering the survey. We shortened the time it took to complete the survey from 10 to 11 minutes to 7 to 8 minutes by improving the layout and flow of the survey and tightening the wording of survey questions.

We fully acknowledge that the range of participants represented in our survey, which was designed to be balanced but is skewed in some ways, poses problems for generalizing their experiences to the larger population. We also recognize that parts of our survey were dependent on the time and context in which respondents answered questions. For example, our survey included questions asking respondents about experiences with climate change news and information that they had encountered within the previous two weeks. During the time the survey was being deployed, certain survey participants may have been impacted by the Hawaii wildfires in Fall 2023, droughts and heatwaves across the South and Midwest in Fall 2023, and heatwaves in Winter 2024, among other extreme weather events, thus influencing their attitudes and beliefs about the climate emergency.

For these reasons and others, the PIL findings from this survey are limited. The findings cannot be generalized to a larger population beyond our sample. While fully acknowledging that further research is required to confirm our findings, especially in terms of generalizing to a larger population, the data we have collected, the response rates, and the data analysis applied and reported have shown consistent responses and fairly robust relationships. As such, these data provide a detailed snapshot of our sample and their information worlds, and they suggest directions for further inquiry in qualitative and quantitative studies from a variety of disciplines.

Keywords and Definitions

Climate change is “long-term shifts in temperature and weather patterns that affect people’s health, ability to grow food, housing, safety, and work around the world,” according to the United Nations.10

Extreme weather events include both weather-related and climate-related events: “Weather-related events are shorter incidents such as tornadoes, deep freezes or heat waves. Climate-related events last longer or are caused by a buildup of weather-related events over time, and include droughts or wildfires,” according to the World Economic Forum.11 It is not always clear whether climate change contributed to a particular weather event, though scientists are developing new methods for accurate attribution.12

Flattening effect is the result of a community or geographic location in the U.S. experiencing an extreme weather event that collapses differences in political orientation, religion, income, or age and has great potential to unite people in collective action.

Information worlds are collective arrangements of personalized news, information technologies, and social spaces through which people encounter and engage with news and information about the world, which in turn define their attitudes, beliefs, and understanding of the world, and shape who they are.

Mile markers are significant points in the climate mapping for comparing the information practices, personal belief systems, community alliances, and affinities and differences of our sample. Mile markers are instrumental for revealing how individuals’ information worlds are constructed and further, for describing the technological and social information infrastructures that shape understanding of climate change in America.

News is information resulting from reporting, analysis, and commentary on events happening around the world. While much of what people define as news is packaged in the traditional form of television, radio or newspaper reporting, individuals may encounter news through YouTube videos, through digital and in-person social interactions, through podcasts, or even in the form of memes.13

Climate terrain refers to the depiction of how groups of individuals understand climate change, including their attitudes, experiences and beliefs. A map of the climate change terrain uses an aggregate of individuals’ survey responses to opinion (Likert-based) questions to visually show the extent of group affinities and differences in a sample as well as relationships among groups.

1. The Yale Program on Climate Change Communication conducts two surveys per year, with results available only as individual PDFs; see https://climatecommunication.yale.edu/visualizations-data/ycom-us/.

2. For the Hope Survey questions, see: https://www.pewresearch.org/question-search/. For background, see: C.R. Snyder, S.C. Sympson, F.C. Ybasco, T.F. Borders, M.A. Babyak, and R.L. Higgins, “Development And Validation Of The State Hope Scale,” Journal of Personality and Social Psychology 70, no. 2 (1996): 321-335, https://doi.org/10.1037/0022-3514.70.2.321.

3. Gallup polls Americans on climate change annually in March; this page includes questions and results from the 1980s through 2023, https://news.gallup.com/poll/1615/Environment.aspx.

4. We used Pew’s Survey Question search tool to review questions asked between 2021 and 2023. Our search terms were: topic = environment, keyword = climate or warming, https://www.pewresearch.org/question-search/.

5. “The Washington Post-Kaiser Family Foundation Climate Change Survey, July 9-Aug. 5, 2019, December 9, 2019,” The Washington Post, December 9, 2019, https://www.washingtonpost.com/context/washington-post-kaiser-family-foundation-climate-change-survey-july-9-aug-5-2019/601ed8ff-a7c6-4839-b57e-3f5eaa8ed09f/?itid=lk_inline_manual_151.

6. Pete Ondish, Ph.D., at the University of Illinois Urbana-Champaign was the survey consultant we hired to review the sequencing of our survey instrument and the clarity of the concepts we were measuring.

7. Percentages don’t total to 100%, due to rounding.

8. Totals presented throughout this report may not add up to 100% due to rounding.

9. Caroline Delbert and Colleen Kilday, “The Most Liberal College In America, According To Students,” Microsoft Start, May 1, 2024, https://www.msn.com/en-us/money/careersandeducation/the-most-liberal-college-in-america-according-to-students-plus-see-the-rest-of-the-top-50/ss-AA1nYLf0.

10. “What Is Climate Change?” United Nations Climate Action, 2024, https://www.un.org/en/climatechange/what-is-climate-change.

11. Olivia Rosane, “Extreme Weather 101: Everything You Need To Know,” World Economic Forum, April 11, 2022, https://www.weforum.org/agenda/2022/04/extreme-weather-101-everything-you-need-to-know/.

12. Ben Clarke and Friederike Otto, “Reporting Extreme Weather And Climate Change: A Guide For Journalists,” World Weather Attribution, https://www.worldweatherattribution.org/wp-content/uploads/ENG_WWA-Reporting-extreme-weather-and-climate-change.pdf.

13. Alison J. Head, John Wihbey, P. Takis Metaxas, Margy MacMillan, and Dan Cohen, “How Students Engage With News: Five Takeaways For Educators, Journalists, And Librarians,” Project Information Literacy Research Institute, October 16, 2018: 13-17, https://www.projectinfolit.org/uploads/2/7/5/4/27541717/newsreport.pdf.